AWS S3 CLI Commands


AWS S3 is one of the most widely used AWS cloud services. You will hardly find a cloud-native application that does not use S3 for hosting files. Today we will focus on using the CLI to manage S3 buckets and files. We will go through the commands and parameters for interacting with S3 buckets through the AWS CLI tool. Let’s start with the basics of S3, how it works, and how you can use the AWS CLI tool to rapidly manage your S3 buckets and their objects.

AWS S3 Commands

Amazon S3 is AWS’s object store and has been an essential AWS service from the start. Amazon S3 has built-in scalability, durability, and fault tolerance. It can store trillions of objects, with a peak load measured in millions of requests per second. The service is based on a pay-as-you-go model. The AWS S3 CLI commands allow you to manage your S3-hosted files and buckets easily and efficiently.

The AWS CLI is an open-source command-line tool through which you can interact with all parts of AWS S3: files, permissions, policies, buckets, static file hosting, and so on. The AWS S3 CLI commands are similar to standard network copy tools like scp or rsync. They are used to copy, view, and delete Amazon S3 buckets and the objects within them. The CLI tool supports all the key features required for smooth operations with Amazon S3 buckets.

The AWS CLI also provides the aws s3api commands, which expose more of the unique features of Amazon S3 and provide access to bucket metadata, including S3 bucket lifecycle policies.

You can use AWS S3 CLI to perform different operations like:

- Multipart parallelized uploads

- Integration with AWS IAM users and roles

- Management of S3 bucket metadata

- Encryption of S3 buckets/objects

- Bucket policies

- Setting permissions

- Add/edit/remove objects from buckets

- Add/edit/remove buckets

- Securing file access through pre-signed URLs

- Copy, sync, and move objects between buckets

List of AWS S3 CLI Commands

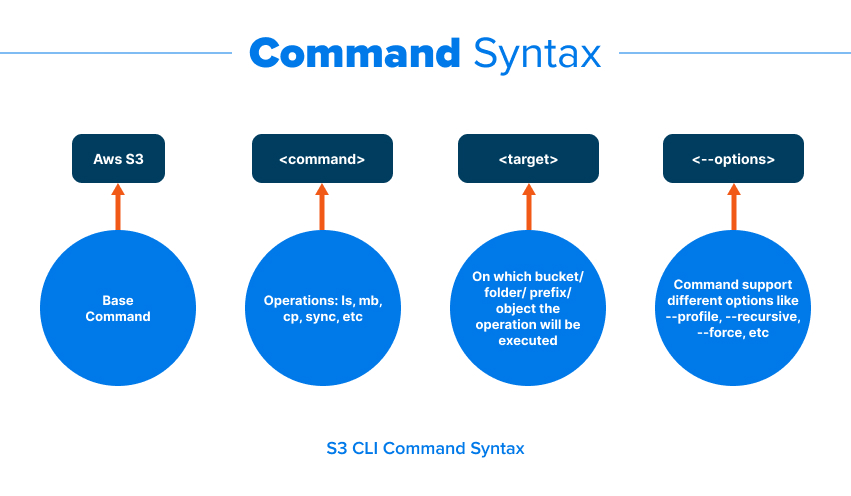

Note that you will need to download, install, and configure the AWS CLI before using S3 commands. Most of the commands below start with the prefix aws s3. Some of the important commands, parameters, and switches are:

- The cp command initiates a copy operation to or from Amazon S3 buckets.

- The --quiet parameter ensures that the AWS CLI for Amazon S3 displays only errors instead of a line for each file copied.

- The --recursive option instructs the S3 CLI to operate recursively into subdirectories on the source.

- The Linux time command is used to get statistics on how long the S3 command execution took.
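The switches above can be combined in one call. The following is a minimal sketch, not a definitive script: the function name `upload_dir_quietly` is made up for illustration, and the directory and bucket names are placeholders.

```shell
# Sketch only: upload_dir_quietly is a hypothetical helper name.

# upload_dir_quietly SRC_DIR BUCKET
# Copies SRC_DIR recursively to s3://BUCKET and prints only errors.
upload_dir_quietly() {
  local src_dir="$1" bucket="$2"
  aws s3 cp "$src_dir" "s3://$bucket" --recursive --quiet
}

# Usage (requires a configured AWS CLI; in bash, `time` can wrap a function):
#   time upload_dir_quietly /data my-test-bucket-for-johncarter
```

Wrapping the call in `time` reports how long the whole transfer took, while `--quiet` keeps the per-file output from drowning out that result.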

Let’s go through some of the most important S3 CLI commands.

- Create new bucket

$ aws s3api create-bucket --bucket test-bucket --region us-east-1

{

"Location": "/test-bucket"

}

You can also create a bucket with the following syntax. It achieves the same purpose but uses a different command:

aws s3 mb s3://my-test-bucket-for-johncarter

Note that the bucket name must be globally unique. Also, if you do not specify a region, the bucket will be created in the default region set by your CLI configuration. To explicitly create a bucket in a particular region, use the --region parameter as shown below:

aws s3 mb s3://my-test-bucket-for-johncarter --region us-west-1
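Besides being globally unique, a bucket name has to follow the S3 naming rules, so it can be worth checking the name locally before calling the CLI. The sketch below covers only a subset of those rules (length 3 to 63, lowercase letters, digits, dots and hyphens, a letter or digit at both ends); the function names `is_valid_bucket_name` and `make_bucket` are hypothetical helpers, not part of the AWS CLI.

```shell
# Sketch only: both function names are illustrative, not AWS CLI features.

# is_valid_bucket_name NAME
# Returns 0 if NAME passes a subset of the S3 bucket naming rules.
# Rules such as "must not look like an IP address" are NOT checked here.
is_valid_bucket_name() {
  printf '%s\n' "$1" |
    LC_ALL=C grep -Eq '^[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]$'
}

# make_bucket NAME REGION
# Creates the bucket in REGION, refusing names that fail the local check.
make_bucket() {
  local name="$1" region="$2"
  if ! is_valid_bucket_name "$name"; then
    echo "invalid bucket name: $name" >&2
    return 1
  fi
  aws s3 mb "s3://$name" --region "$region"
}

# Usage (requires a configured AWS CLI):
#   make_bucket my-test-bucket-for-johncarter us-west-1
```

A local check like this cannot tell whether the name is already taken by another account; only the `aws s3 mb` call itself reveals that.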

- Copy file from local file system to S3 bucket:

$ aws s3 cp samplefile.txt s3://my-test-bucket-for-johncarter

- Copy the whole folder from the local file system to S3 bucket:

$ aws s3 cp /data s3://my-test-bucket-for-johncarter --recursive

- Download a file from S3 bucket:

$ aws s3 cp s3://my-test-bucket-for-johncarter/samplefile.txt ./samplefile.txt

- Copy a file from one bucket to another bucket. Note that you need permissions on both buckets.

$ aws s3 cp s3://my-test-bucket-for-johncarter/staging/configuration.xml s3://johncarterbackupbucket

- Move a file from S3 bucket to your local file system

$ aws s3 mv s3://my-test-bucket-for-johncarter/configuration.xml /home/project

Note that the above command removes the file from the S3 bucket, so it is a cut operation rather than a copy.

- Delete all files from S3 bucket

$ aws s3 rm s3://my-test-bucket-for-johncarter --recursive

This command will remove all the files present in the bucket.

- Delete a particular file from the bucket:

aws s3 rm s3://my-test-bucket-for-johncarter/configuration.xml

- Delete all files from a bucket except some particular files. The command below removes all files from the bucket except the XML files:

aws s3 rm s3://my-test-bucket-for-johncarter --recursive --exclude "*.xml"
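Because a recursive delete cannot be undone on an unversioned bucket, it can help to preview it first. The sketch below uses the `--dryrun` flag of `aws s3 rm`, and also shows the inverse filter (delete only the matching files) by excluding everything and then re-including a pattern. The function names are hypothetical, and the bucket name is a placeholder.

```shell
# Sketch only: both function names are illustrative helpers.

# preview_delete_except BUCKET KEEP_PATTERN
# Prints what would be deleted, keeping objects that match KEEP_PATTERN.
# Nothing is removed because of --dryrun.
preview_delete_except() {
  local bucket="$1" keep_pattern="$2"
  aws s3 rm "s3://$bucket" --recursive --exclude "$keep_pattern" --dryrun
}

# delete_only BUCKET PATTERN
# Deletes only objects that match PATTERN. Filters that appear later on
# the command line take precedence, so --include overrides --exclude "*".
delete_only() {
  local bucket="$1" pattern="$2"
  aws s3 rm "s3://$bucket" --recursive --exclude "*" --include "$pattern"
}

# Usage (requires a configured AWS CLI):
#   preview_delete_except my-test-bucket-for-johncarter "*.xml"
#   delete_only my-test-bucket-for-johncarter "*.log"
```

Quoting the pattern matters: without the quotes, the shell may expand `*.xml` against local files before the AWS CLI ever sees it.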

- Sync the files from your local file system to an S3 bucket. It will recursively copy only the new or updated files from the local directory to the destination S3 bucket.

$ aws s3 sync /backupfolder s3://my-test-bucket-for-johncarter

- Sync all files from S3 bucket to your local file system

$ aws s3 sync s3://my-test-bucket-for-johncarter/backup /localfolder/backup

- You can also use the sync command to sync the files from one S3 bucket to another S3 bucket.

$ aws s3 sync s3://my-test-bucket-for-johncarter s3://backup-bucket
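By default, sync only adds or updates files at the destination. If the destination should mirror the source exactly, the `--delete` flag also removes destination files that no longer exist at the source. The following is a minimal sketch under that assumption; `mirror_to_bucket` is a hypothetical helper name, and the paths are placeholders.

```shell
# Sketch only: mirror_to_bucket is an illustrative helper.

# mirror_to_bucket SRC_DIR BUCKET [extra "aws s3 sync" options...]
# Makes s3://BUCKET match SRC_DIR, deleting remote files that are
# missing locally. Refuses to run if SRC_DIR does not exist, so a
# mistyped path is reported instead of being passed to the CLI.
mirror_to_bucket() {
  local src_dir="$1" bucket="$2"
  shift 2
  if [ ! -d "$src_dir" ]; then
    echo "source directory not found: $src_dir" >&2
    return 1
  fi
  aws s3 sync "$src_dir" "s3://$bucket" --delete "$@"
}

# Usage (requires a configured AWS CLI); preview first, then run for real:
#   mirror_to_bucket /backupfolder my-test-bucket-for-johncarter --dryrun
#   mirror_to_bucket /backupfolder my-test-bucket-for-johncarter
```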

- Host a static website on an S3 bucket. You will need to specify both the index and error documents.

aws s3 website s3://my-test-bucket-for-johncarter/ --index-document index.html --error-document error.html

This bucket is in the us-east-2 region. So, after executing the above command, you can access my-test-bucket-for-johncarter as a static website using the following URL: http://my-test-bucket-for-johncarter.s3-website.us-east-2.amazonaws.com/

You also need to allow public read access on this S3 bucket so that it can act as a public-facing website.
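One common way to grant that public read access is a bucket policy that allows `s3:GetObject` for everyone. The sketch below only generates the policy document; `public_read_policy` and the file name `policy.json` are illustrative choices, and the Block Public Access settings on the bucket or account may also have to be relaxed before S3 accepts such a policy.

```shell
# Sketch only: public_read_policy is an illustrative helper.

# public_read_policy BUCKET
# Prints a bucket policy that lets anyone read (GET) objects in BUCKET.
public_read_policy() {
  local bucket="$1"
  cat <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "PublicReadGetObject",
      "Effect": "Allow",
      "Principal": "*",
      "Action": "s3:GetObject",
      "Resource": "arn:aws:s3:::${bucket}/*"
    }
  ]
}
EOF
}

# Usage (requires a configured AWS CLI):
#   public_read_policy my-test-bucket-for-johncarter > policy.json
#   aws s3api put-bucket-policy \
#     --bucket my-test-bucket-for-johncarter --policy file://policy.json
```

The policy grants read access only; it does not allow listing, uploading, or deleting objects.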

- Pre-sign the URL of S3 objects for temporary access

When you pre-sign a URL for an S3 file, anyone accessing the URL can retrieve the S3 file with an HTTP GET request. For example, if you want to give someone temporary access to the configuration.xml file, just pre-sign this specific S3 object as below.

$ aws s3 presign s3://my-test-bucket-for-johncarter/configuration.xml

By default, the above URL will be valid for 3600 seconds (1 hour). If you want to specify a different expiry time, use the --expires-in parameter. The following command will create a pre-signed URL that is valid for only 60 seconds.

$ aws s3 presign s3://my-test-bucket-for-johncarter/configuration.xml --expires-in 60

If someone tries to access this file after 1 minute has passed, they will see the error message below:

<Error>

<Code>AccessDenied</Code>

<Message>Request has expired</Message>

<Expires>2022-04-07T11:38:12Z</Expires>

<ServerTime>2022-04-07T11:38:21Z</ServerTime>

<RequestId>45654343434</RequestId>

<HostId>

VM-4343543434

</HostId>

</Error>
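Since `--expires-in` takes seconds, a small wrapper can make the expiry easier to read and guard against values the CLI would reject. This is a minimal sketch: `presign_for_minutes` is a hypothetical helper, and the upper bound assumes the documented maximum of 604800 seconds (7 days).

```shell
# Sketch only: presign_for_minutes is an illustrative helper.

# presign_for_minutes BUCKET KEY MINUTES
# Prints a pre-signed URL for s3://BUCKET/KEY valid for MINUTES minutes.
presign_for_minutes() {
  local bucket="$1" key="$2" minutes="$3"
  local seconds=$((minutes * 60))
  # Assumption: 604800 seconds (7 days) is the maximum for --expires-in.
  if [ "$seconds" -le 0 ] || [ "$seconds" -gt 604800 ]; then
    echo "expiry must be between 1 minute and 7 days" >&2
    return 1
  fi
  aws s3 presign "s3://$bucket/$key" --expires-in "$seconds"
}

# Usage (requires a configured AWS CLI):
#   presign_for_minutes my-test-bucket-for-johncarter configuration.xml 5
```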

- Remove an S3 bucket

$ aws s3 rb s3://my-test-bucket-for-johncarter

The above command will remove the bucket. Note that the bucket must be empty before you can remove it. If it contains any objects, the command will fail with an error. To forcibly remove a bucket that contains objects, use the --force parameter as below:

$ aws s3 rb s3://my-test-bucket-for-johncarter --force

- Encrypt existing S3 object using CLI

You can copy a single object over itself to encrypt it with the default encryption provided by AWS, i.e., SSE-S3 (server-side encryption with Amazon S3-managed keys):

aws s3 cp s3://my-test-bucket-for-johncarter/testfile s3://my-test-bucket-for-johncarter/testfile --sse AES256
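To confirm that an object is stored with server-side encryption, one option is to read its metadata with `aws s3api head-object`. The sketch below assumes the response contains a `ServerSideEncryption` field (for example `AES256`) when the object is encrypted; `object_encryption` is a hypothetical helper name.

```shell
# Sketch only: object_encryption is an illustrative helper.

# object_encryption BUCKET KEY
# Prints the server-side encryption setting reported for the object,
# for example AES256 for SSE-S3.
object_encryption() {
  local bucket="$1" key="$2"
  aws s3api head-object --bucket "$bucket" --key "$key" \
    --query ServerSideEncryption --output text
}

# Usage (requires a configured AWS CLI):
#   object_encryption my-test-bucket-for-johncarter testfile
```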

AWS S3 List Buckets

You can use the S3 CLI to interact with S3 buckets in many ways. You can list all the buckets in your account, list the objects in a specific bucket, and move data between buckets.

Find below some of the CLI commands through which you can list and manage your S3 buckets using AWS CLI:

- List all the buckets in your AWS account.

$ aws s3 ls

- Display a summary of the total number and size of the objects in the bucket. The --recursive option includes the objects under every prefix in the totals:

$ aws s3 ls s3://my-test-bucket-for-johncarter --recursive --summarize

- To view the objects in an S3 bucket with their sizes in a human-readable form, use the following command:

aws s3 ls s3://my-test-bucket-for-johncarter/staging/ --recursive --human-readable --summarize
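In a script, the totals are often all that is needed from a summarized listing. The following sketch extracts them from the command output, assuming the summary lines have the form `Total Objects: N` and `Total Size: N` as the CLI prints them; `summary_field` is a hypothetical helper name.

```shell
# Sketch only: summary_field is an illustrative helper.

# summary_field NAME
# Reads "aws s3 ls ... --summarize" output on stdin and prints the value
# that follows "Total NAME:", where NAME is Objects or Size.
summary_field() {
  sed -n "s/^ *Total $1: *//p"
}

# Usage (requires a configured AWS CLI):
#   aws s3 ls s3://my-test-bucket-for-johncarter --recursive --summarize |
#     summary_field Objects
```

Without `--human-readable`, the size is reported in bytes, which is easier to compare or sum in later steps.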

Mastering S3 Commands

Today we discussed the AWS S3 CLI commands in detail. S3 is an essential cloud service used by many cloud-native applications today for hosting images, videos, and other binary files. We showed you different ways of interacting with S3 buckets and how to perform different operations on S3 buckets.

As with any other AWS service, a good way to build expertise in AWS S3 is to earn an AWS certification. In particular, the AWS Solutions Architect Associate and AWS SysOps Administrator certifications are very helpful for understanding how S3 buckets work, especially through the AWS CLI commands.

